Data Preparation and Usage for Common Data Set and External Surveys

Abstract

To increase efficiency and lower the risk of human error for all offices involved, the Mānoa Institutional Research Office (MIRO) streamlined and automated the data preparation process for the Common Data Set (CDS) and many external surveys, minimizing the reporting burden while improving data accuracy and consistency.

Introduction

There are two important standardized data collection systems in the United States: the Department of Education’s Integrated Postsecondary Education Data System (IPEDS) and the Common Data Set Initiative (CDS). The Common Data Set is a set of questions and reporting standards, developed collaboratively by higher education institutions and several external data publishers, that aims to improve the quality of the information colleges and universities provide. Over the years, it has relieved much of the reporting burden that universities faced when different publishers asked similar but slightly different questions. The CDS also collects a lot of useful university data that is often not included in IPEDS surveys, so it serves as an important resource for anyone who needs comparable data from different institutions.

When looking for colleges or graduate programs to apply to, prospective students often consult major publishers such as U.S. News & World Report and the College Board. As a result, the data an institutional research office submits through external surveys are used by college guide publishers and university rankings, and can affect university recruitment and marketing efforts.

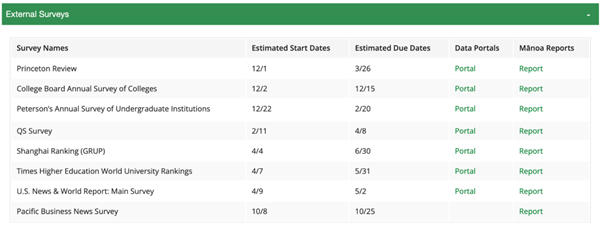

Different institutions may choose to participate in different external surveys. Here at UH Mānoa, we help prepare about a dozen external surveys each year (see Figure 1). To make it quicker to reach the various data entry portals and to check how our university is presented on external publishers’ websites, MIRO maintains a list of key external surveys with links and important dates. The list also reminds the team when to expect each survey and when its window closes, which saves MIRO a lot of time. To help people quickly locate the CDS reports, MIRO publishes the five most recent years of CDS reports in the “Report” section of its website.
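A tracking list like this can also be kept as structured data so that approaching deadlines are flagged automatically. The following is a minimal Python sketch under stated assumptions: the `ExternalSurvey` fields and the `due_soon` and `expected_soon` helpers are hypothetical, and the survey names, URLs, and dates are placeholders rather than entries from MIRO’s actual list.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ExternalSurvey:
    """One row of a survey tracking list (hypothetical fields)."""
    name: str
    portal_url: str   # link to the data entry portal
    opens: date       # when the survey is expected to arrive
    closes: date      # when the survey window closes


def due_soon(surveys, today, days=14):
    """Surveys that are open today and close within `days` days, earliest deadline first."""
    horizon = today + timedelta(days=days)
    hits = [s for s in surveys if s.opens <= today <= s.closes <= horizon]
    return sorted(hits, key=lambda s: s.closes)


def expected_soon(surveys, today, days=14):
    """Surveys that have not opened yet but are expected within `days` days."""
    horizon = today + timedelta(days=days)
    return sorted((s for s in surveys if today < s.opens <= horizon), key=lambda s: s.opens)


# Placeholder entries: names, URLs, and dates are invented for illustration.
surveys = [
    ExternalSurvey("Survey A", "https://example.org/a", date(2024, 9, 1), date(2024, 10, 15)),
    ExternalSurvey("Survey B", "https://example.org/b", date(2024, 10, 20), date(2024, 12, 1)),
    ExternalSurvey("Survey C", "https://example.org/c", date(2024, 9, 15), date(2024, 10, 5)),
]

for s in due_soon(surveys, today=date(2024, 10, 1)):
    print(f"{s.name} closes {s.closes:%b %d}: {s.portal_url}")
```

With the sample data, Survey C is listed before Survey A because its window closes first; Survey B is not listed because it has not opened yet.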

Like many other institutional research (IR) offices, MIRO calculates data and collects information from many offices across the university to compile these reports. Over the years, MIRO has streamlined the data preparation process for the CDS and other external surveys, which has helped minimize the reporting burden and improve overall data quality.

Consolidating Questions to Improve Efficiency

To find ways to improve efficiency, MIRO first identified the two main types of work the office performs for the Common Data Set and external surveys: calculating data from the university’s database, and collecting information from offices across campus.

Institutional research offices can calculate some of the data requested by external surveys, but the rest can only be answered by other offices. Reaching out for answers every time IR receives a new survey generates long email threads that distract both IR and the other offices. Because the CDS and external surveys stay largely the same from year to year, many questions can be consolidated and sent to offices at the beginning of the fall semester. This way, the consolidated questions reach each office in one fell swoop, and offices are contacted later only about the limited number of new questions that appear in new surveys.
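The consolidation step amounts to grouping every question that IR cannot answer by the office responsible for it and dropping repeats, so that each office receives a single list. A minimal Python sketch, assuming each survey question has already been tagged with a responsible office; the survey names, question wording, and the crude text normalization used to spot duplicates are all hypothetical.

```python
from collections import defaultdict


def normalize(text):
    """Crude duplicate key (an assumption): lowercase, collapse whitespace, drop a trailing '?'."""
    return " ".join(text.lower().split()).rstrip("?")


def consolidate(questions):
    """Group (survey, office, question) rows into one deduplicated questionnaire per office.

    Returns {office: [(question, [surveys asking it]), ...]} in first-seen order.
    """
    by_office = defaultdict(dict)  # office -> {normalized text: (original text, surveys)}
    for survey, office, question in questions:
        key = normalize(question)
        if key not in by_office[office]:
            by_office[office][key] = (question, [])
        by_office[office][key][1].append(survey)
    return {office: list(qs.values()) for office, qs in by_office.items()}


# Hypothetical questions tagged with the office that answers them.
rows = [
    ("CDS", "Residence Life", "Number of students living in campus housing?"),
    ("Survey X", "Residence Life", "Number of students living in campus  housing"),
    ("Survey X", "Career Services", "Do you offer on-campus job fairs?"),
    ("Survey Y", "Career Services", "Do you offer on-campus job fairs?"),
]

for office, items in consolidate(rows).items():
    print(office)
    for question, asked_by in items:
        print(f"  - {question}  (asked by: {', '.join(asked_by)})")
```

Keeping the list of surveys next to each question makes it easy to route each answer back to every survey that asks it.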

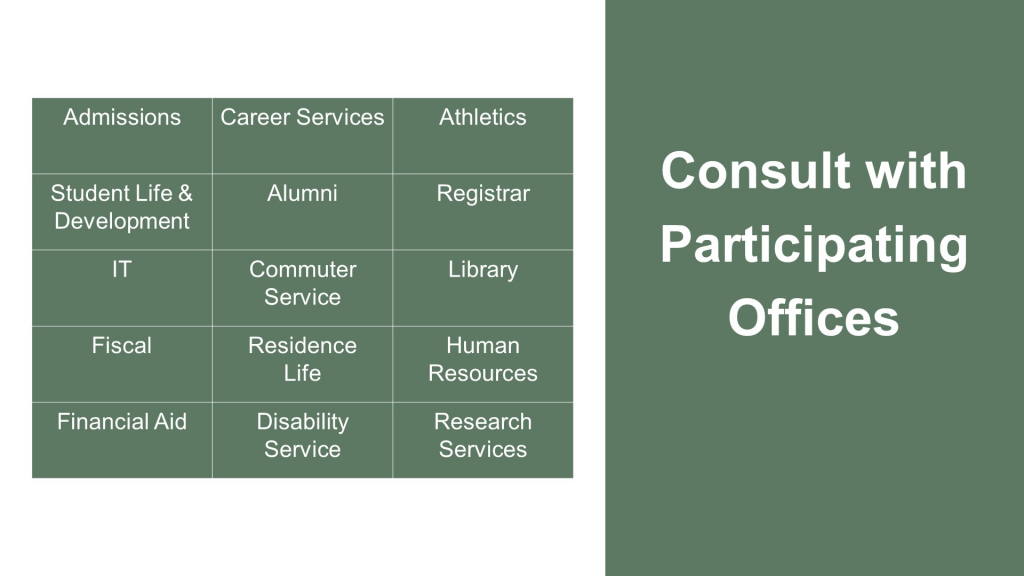

Because this was a completely new process, it was important to help other offices understand its purpose and how it works. Before implementing the new procedures, MIRO staff met with all 15 participating offices to review the consolidated questionnaire and the new collection method together.

While this new method saved time, there was still frequent back-and-forth email because MIRO was collecting information from each office separately. This created a risk of missing emails or saving data in the wrong folder. On top of that, when offices did not answer by the deadline, repeatedly contacting them for the information took a lot of extra time.

After examining the situation, we identified two bottlenecks that kept the office from gaining more efficiency: (1) manually downloading and saving data, and (2) having to communicate with each office one-on-one.

The Manual Procedure (Bottleneck Issue 1)

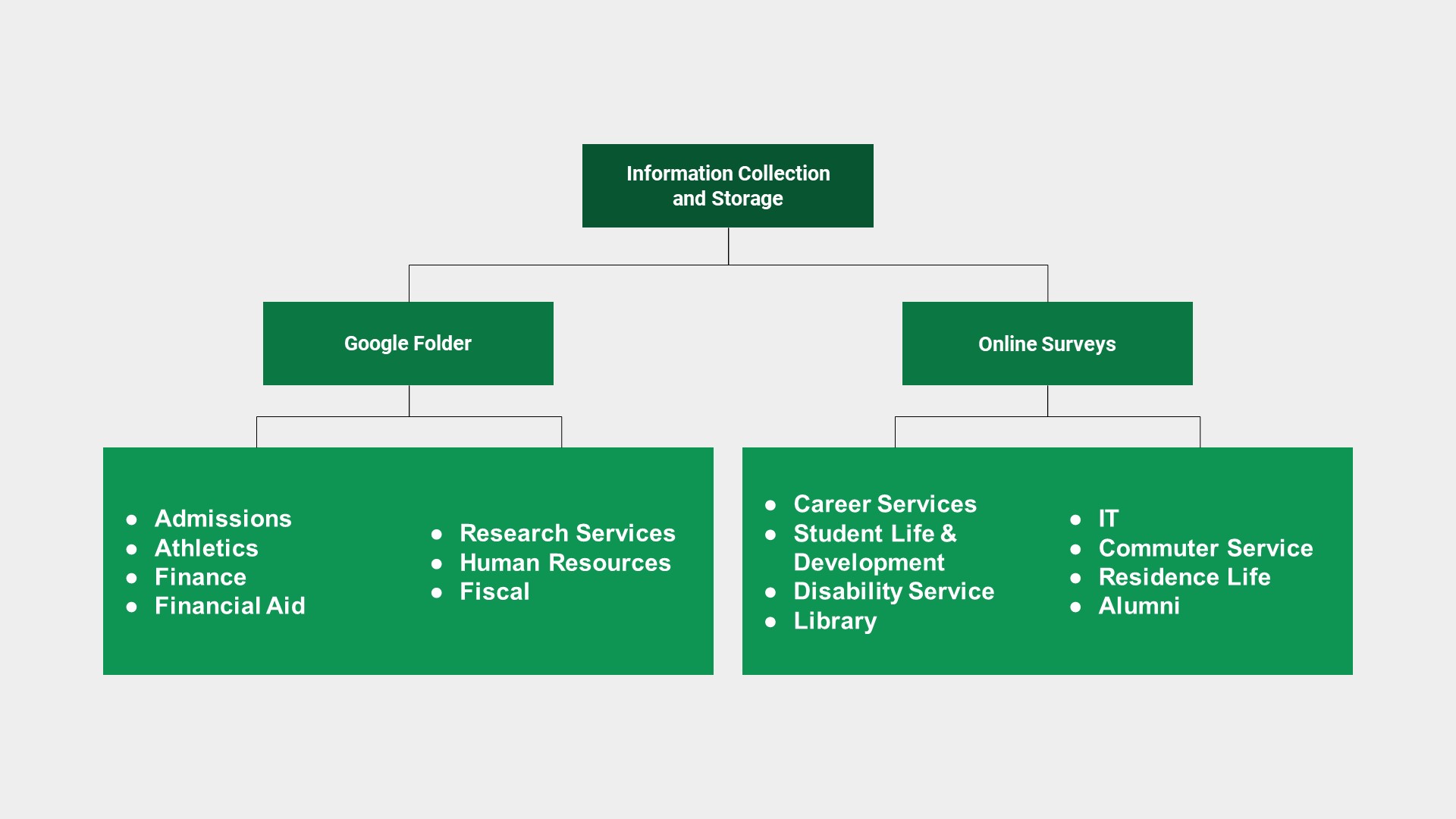

To eliminate the manual procedure, we first needed to turn the consolidated dataset into a format in which offices could easily enter and upload their answers. What downloading and saving data involves depends on how much information an office provides each year, so MIRO classified offices into two types: offices with minimal data entry and offices that provide larger amounts of data (see Figure 5).

For offices with minimal data entry, whose answers typically remain the same year after year, MIRO created an internal consolidated questionnaire by transferring all of the separate external survey questions into an online survey in SurveyMonkey. The offices that rely on this survey most include Career Services, IT, Commuter Service, Residence Life, and others.

The consolidated survey begins by asking respondents for their name and contact information, which helps keep track of who provides the information over the years. To help offices prepare their answers, the previous year’s answer is shown after each question. If an answer needs to change, the office simply enters the new answer in the comment box below it.

It takes most offices less than ten minutes to review the consolidated survey, which encourages them to provide data to MIRO on time. Records show that over 70% of offices typically complete the survey before MIRO sends out any reminder email.
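A response-rate figure like this can be recomputed each cycle from submission dates. The sketch below assumes a hypothetical mapping from office to submission date (or `None` if the office has not submitted) plus the date of the first reminder; the sample data are invented and do not reproduce MIRO’s records.

```python
from datetime import date


def share_before_reminder(submissions, first_reminder):
    """Fraction of offices that submitted strictly before the first reminder date.

    `submissions` maps office name -> submission date, or None if not yet submitted.
    """
    if not submissions:
        return 0.0
    early = sum(1 for d in submissions.values() if d is not None and d < first_reminder)
    return early / len(submissions)


# Invented example data for illustration only.
submissions = {
    "Office A": date(2024, 9, 3),
    "Office B": date(2024, 9, 10),
    "Office C": date(2024, 9, 30),
    "Office D": None,
}
print(f"{share_before_reminder(submissions, date(2024, 9, 23)):.0%}")  # 50%
```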

In the past, MIRO compared the current year’s answers with the previous year’s manually, which was tedious and risked missing new answers. With the consolidated survey, any new information offices enter appears in the comment boxes and is easy to find, which makes MIRO’s updating process much faster and more efficient.
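Because offices only type in the comment box when an answer changes, the review can be reduced to one rule: keep last year’s answer unless a different new answer was entered. A minimal sketch, assuming the responses have been exported to CSV with hypothetical columns `question_id`, `previous_answer`, and `new_answer`; a real SurveyMonkey export uses its own layout and would need to be mapped to these columns first.

```python
import csv
import io


def merge_answers(csv_text):
    """Return (final answers, ids of changed questions) from an exported response file.

    A non-blank `new_answer` that differs from `previous_answer` replaces it;
    otherwise last year's answer stands.
    """
    final, changed = {}, []
    for row in csv.DictReader(io.StringIO(csv_text)):
        previous = row["previous_answer"].strip()
        new = (row.get("new_answer") or "").strip()
        if new and new != previous:
            final[row["question_id"]] = new
            changed.append(row["question_id"])
        else:
            final[row["question_id"]] = previous
    return final, changed


# Hypothetical export: Q1 unchanged (blank comment), Q2 updated, Q3 re-entered as-is.
export = """question_id,previous_answer,new_answer
Q1,Yes,
Q2,1200,1350
Q3,No,No
"""
answers, changed = merge_answers(export)
print(changed)  # ['Q2']
```

Only the questions in `changed` need a closer look, which is the point of collecting new answers separately from last year’s.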

Inter-Office Communication (Bottleneck Issue 2)

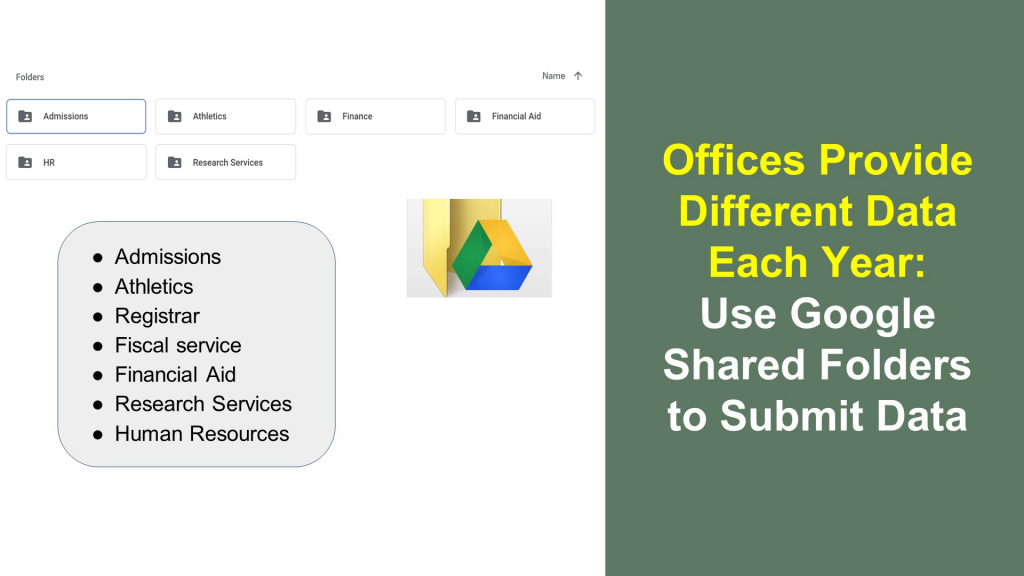

For offices that provide larger amounts of data each year, it is much easier to use Excel spreadsheets or Word documents than to enter data in an online survey. MIRO consolidates this information by creating a shared Google folder for each office, where the office can access the files it needs and enter its updated data. Links to each office’s files are included in the data collection emails. This eliminates the manual process of transferring files by email and saves a lot of time. Offices that use shared folders include the Admissions Office, the Athletics Department, the Registrar’s Office, and others.
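Putting each office’s folder link into its collection email is a small mail-merge step. The sketch below uses Python’s standard `string.Template`; the template wording, contact names, deadline, and folder URLs are hypothetical placeholders, and actually sending the messages is left out.

```python
from string import Template

# Hypothetical template text; MIRO's actual email templates are not reproduced here.
COLLECTION_EMAIL = Template(
    "Hello $contact,\n\n"
    "The data collection window for $office is now open.\n"
    "Please enter your updates in your shared folder by $deadline:\n"
    "$folder_url\n"
)


def build_emails(offices, deadline):
    """Return {office: message body}, one personalized message per data-providing office."""
    return {
        o["office"]: COLLECTION_EMAIL.substitute(
            contact=o["contact"],
            office=o["office"],
            deadline=deadline,
            folder_url=o["folder_url"],
        )
        for o in offices
    }


# Placeholder contacts and links for illustration.
offices = [
    {"office": "Registrar's Office", "contact": "Pat",
     "folder_url": "https://example.org/folders/registrar"},
    {"office": "Athletics Department", "contact": "Sam",
     "folder_url": "https://example.org/folders/athletics"},
]
emails = build_emails(offices, deadline="October 4")
print(emails["Athletics Department"])
```

`substitute` raises `KeyError` if a placeholder is left unfilled, which catches incomplete office records before any email goes out.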

The Google folder includes a data entry guide with instructions on how to enter the data, an archive folder that has completed data sets from previous years for reference, and a blank consolidated data set for new data entry.

To avoid accidental changes and deletions, offices can edit files only during the data collection window. Once an office uploads its data, the files are moved to an archive folder and can no longer be edited. Using both online surveys and shared Google folders lowers the risk of human error, saves time, and relieves stress for everyone involved.
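The two rules here (edit only inside the window, archive and lock after upload) can be modeled on any file store. The sketch below uses a local directory as a stand-in for a shared Google folder; it does not call the Google Drive API. The `archive` subfolder name, the file name, and the window dates are assumptions for illustration.

```python
import shutil
import stat
import tempfile
from datetime import date
from pathlib import Path


def can_edit(today, opens, closes):
    """Offices may edit their files only while the collection window is open."""
    return opens <= today <= closes


def archive_submission(office_dir, filename):
    """Move an uploaded file into office_dir/archive and clear its write permissions."""
    office_dir = Path(office_dir)
    archive = office_dir / "archive"  # assumed subfolder name
    archive.mkdir(exist_ok=True)
    dest = Path(shutil.move(str(office_dir / filename), str(archive / filename)))
    # Remove owner, group, and other write bits so the archived copy is read-only.
    dest.chmod(dest.stat().st_mode & ~(stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH))
    return dest


# Example with a hypothetical window from Aug 26 to Oct 7.
print(can_edit(date(2024, 10, 1), opens=date(2024, 8, 26), closes=date(2024, 10, 7)))  # True

with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "office_data.xlsx").write_text("placeholder contents")
    archived = archive_submission(tmp, "office_data.xlsx")
    print(archived.relative_to(tmp).as_posix())  # archive/office_data.xlsx
    archived.chmod(stat.S_IRUSR | stat.S_IWUSR)  # restore write access so cleanup works everywhere
```

On a real shared drive, the equivalent of clearing the write bits would be changing the office’s sharing role on the archived file.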

To guide staff through the year-long collection of data from other offices, MIRO created a timeline showing when to collect which information. The timeline is based on when data become available and when external surveys are released (see Figure 10).

In the year-long collection cycle, the fall collection gathers most of the data. To help expedite the process, MIRO uses email templates that are sent out at specific times (see Figure 11). In late July, offices are asked to confirm or update their data providers’ contact information; this email also gives offices a heads-up that the next data collection cycle will begin soon. In late August, MIRO notifies offices that the data collection window is open and that they have six weeks to submit their answers. The first reminder is sent four weeks into the collection window, and if an office still has not responded, a second reminder is sent around the fifth week.
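This cadence translates directly into date arithmetic. In the sketch below, the six-week window and the reminder four weeks in follow the text; placing the second reminder at exactly five weeks is an assumption (the text says "around the fifth week"), and the July and August dates are placeholders, since the article gives only "late July" and "late August".

```python
from datetime import date, timedelta


def collection_schedule(window_opens, contact_check):
    """Key dates for the fall collection cycle.

    window_opens:  date the "collection window is open" email goes out (late August)
    contact_check: date of the contact-confirmation email (late July)
    The second reminder at exactly +5 weeks is an assumption.
    """
    return {
        "confirm contacts": contact_check,
        "window opens": window_opens,
        "first reminder": window_opens + timedelta(weeks=4),
        "second reminder": window_opens + timedelta(weeks=5),
        "window closes": window_opens + timedelta(weeks=6),
    }


# Placeholder dates for illustration.
for step, when in collection_schedule(date(2024, 8, 26), date(2024, 7, 29)).items():
    print(f"{when:%a %b %d}  {step}")
```

Generating the dates once per cycle lets the same list drive both the staff calendar and the send dates for each email template.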

With this new process, communicating with offices to collect information is no longer a time-consuming task for MIRO. Following the email schedule also helps both IR and other offices stay organized and minimizes surprises and distractions.

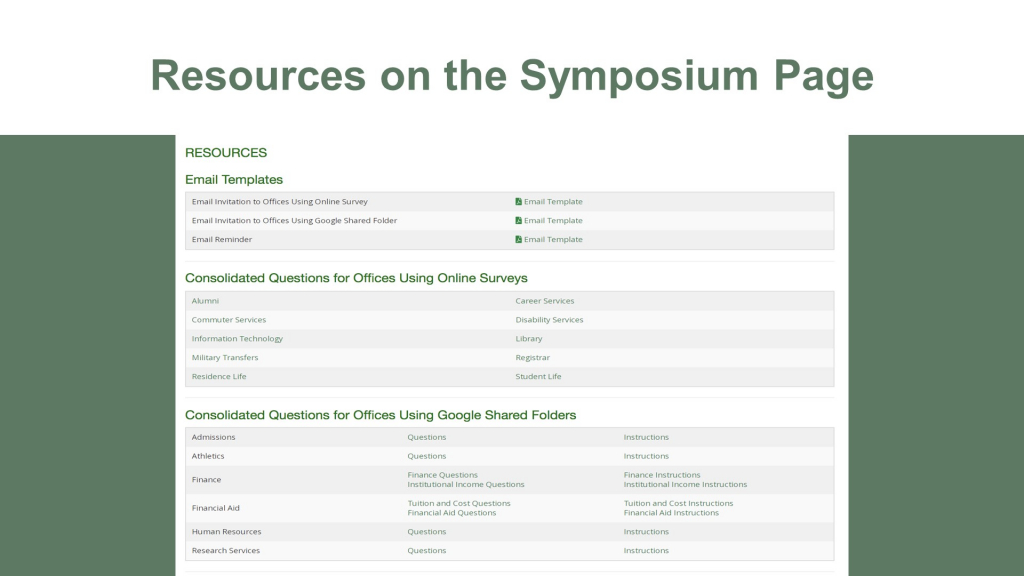

With all of this in mind, MIRO wanted to make the files it created, such as the consolidated questionnaire and email templates, available to the IR community.

Automating Data Processing and Calculation Methods

MIRO’s main goal is to improve the efficiency, consistency, and accuracy of the data preparation process, and in institutional research, automation is key to efficiency. Over the years, MIRO has explored ways to automate data preparation and to consolidate hundreds of questions from the CDS and additional external surveys.

To start the automation process, MIRO examined the questions from every survey the university participates in and identified new data variables that could be pre-calculated and stored in a centralized database. These variables were created with both MySQL and SPSS syntax so that ready-to-report data are available both in SPSS and in the office’s virtual database. Next, MIRO created Excel pivot tables designed to answer the questions in the format each CDS section or external survey uses. This eliminated the tedious manual steps in data preparation.
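The pivot-table step is essentially counting records along the row and column dimensions that a survey question asks for. A minimal pure-Python stand-in for an Excel pivot table with Count as the value, assuming a hypothetical student-level extract with pre-calculated fields `level`, `load`, and `degree_seeking`; the records are invented and the layout only loosely resembles a CDS enrollment table.

```python
from collections import Counter


def pivot_counts(records, row_key, col_key, where=None):
    """Count records by (row, column) like a pivot table, filling empty cells with 0.

    `where` is an optional filter function applied before counting.
    """
    counts = Counter(
        (rec[row_key], rec[col_key])
        for rec in records
        if where is None or where(rec)
    )
    rows = sorted({r for r, _ in counts})
    cols = sorted({c for _, c in counts})
    return {r: {c: counts.get((r, c), 0) for c in cols} for r in rows}


# Invented records with hypothetical pre-calculated fields.
students = [
    {"level": "Undergraduate", "load": "Full-time", "degree_seeking": True},
    {"level": "Undergraduate", "load": "Full-time", "degree_seeking": True},
    {"level": "Undergraduate", "load": "Part-time", "degree_seeking": False},
    {"level": "Graduate", "load": "Part-time", "degree_seeking": True},
]

table = pivot_counts(students, "level", "load", where=lambda rec: rec["degree_seeking"])
for level, row in table.items():
    print(level, row)
```

Because the heavy lifting (deriving `level`, `load`, and similar fields) happens once in the database, the pivot itself stays a simple count that can be refreshed each year.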

The Times Higher Education World University Rankings (TIMES), for example, ask universities to report data using a specific set of discipline classifications. Mapping departments and majors to these classifications by hand each year would be too time-consuming, so MIRO used MySQL to create a new variable called “times subject” that maps the university’s data to the ranking’s requirements. Each year, MIRO staff simply run the code to generate the new data variables, and the Excel pivot tables then use those variables to produce statistics in the exact format the TIMES survey requires. The setup took some time, but now MIRO staff only need to refresh the data source, and the data are ready for submission within seconds.
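A variable like “times subject” can be thought of as a lookup table joined to student records. The sketch below uses Python’s built-in `sqlite3` module as a stand-in for MySQL; the SQL shown is standard enough to carry over. The table names, major codes, and subject labels are hypothetical and are not the official THE subject list.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Student-level records from the central database (invented rows).
    CREATE TABLE students (student_id INTEGER PRIMARY KEY, major TEXT);
    INSERT INTO students VALUES (1, 'CHEM'), (2, 'BIOL'), (3, 'ECON'), (4, 'CHEM'), (5, 'ART');

    -- Maintained once: maps each local major to a ranking's subject category.
    CREATE TABLE times_subject_map (major TEXT PRIMARY KEY, times_subject TEXT);
    INSERT INTO times_subject_map VALUES
        ('CHEM', 'Physical Sciences'),
        ('BIOL', 'Life Sciences'),
        ('ECON', 'Business & Economics');
""")

# Rerun each year. The LEFT JOIN keeps unmapped majors visible as 'UNMAPPED'
# so new or renamed majors are caught before submission.
query = """
    SELECT COALESCE(m.times_subject, 'UNMAPPED') AS subject, COUNT(*) AS n
    FROM students s
    LEFT JOIN times_subject_map m ON m.major = s.major
    GROUP BY subject
    ORDER BY subject
"""
for subject, n in conn.execute(query):
    print(f"{subject}: {n}")
```

Keeping the mapping in its own table means a new major only requires adding one row, and the yearly query does not change.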

By using SPSS, MySQL, and Excel, and by creating online surveys and Google folders, MIRO is now able to automate a lot of data calculation and information collection procedures. The time saved with improved efficiency enables MIRO’s small team to take on multiple tasks while still being able to conduct new and innovative projects.

Closing Remarks

Many publishers, rankings, and other organizations ask universities to answer their external surveys, which can be extremely overwhelming, especially for IR offices with only one staff member. Because universities can decide which external surveys to participate in, we recommend that IR offices realistically review their data preparation workload and meet with their admissions offices to go over the external surveys together. Asking which surveys have the most influence on prospective students’ decisions can help a university decide which ones are worth participating in.

For IR offices interested in taking a similar automation approach to CDS and external survey preparation, we suggest starting by consolidating questions from different surveys so that data can be collected from the data-providing offices all at once. IR offices can also find ways for data-providing offices to upload data to designated online locations, which avoids manually downloading and saving files. These are the easiest first steps to take, and they save data coordinators a lot of time.

Another point worth mentioning is the free CDS data entry offered by some external surveys, such as U.S. News, Peterson’s, the College Board, and Fiske. To reduce data entry work for institutions and to increase participation in their data collection, many external survey publishers offer to enter CDS data from the updated CDS form an institution provides. Institutional researchers can always ask whether this service is available, but be aware that these organizations usually require institutions to review and verify all data entered, and IR offices still need to enter any non-CDS data themselves.

Successful CDS and external survey data coordination depends on whether other offices on campus can provide timely and accurate information. At MIRO, we have learned that when we put ourselves in our collaborators’ shoes and make the work process as user-friendly as possible, offices are more likely to provide high-quality data in a more timely manner. Our efforts toward this goal included reducing unnecessary email communication, consolidating questions from different external surveys, giving offices sufficient time to prepare their answers, creating processes that make it easier for offices to provide their answers, and providing supporting information such as data explanations and the previous year’s data.